Two first-year lab courses, one in biology and one in materials science, quietly hinge on the same skill: the ability to turn messy observations into stepwise procedures that scale. That shared skill is computational thinking, and it answers a pragmatic question many students ask: why is computational thinking important for STEM students? Because it shortens the path from a problem statement to a reliable, repeatable solution, whether you are aligning gene sequences or optimizing a truss.

If you want a concise answer: computational thinking (CT) makes STEM work faster to reproduce, cheaper to scale, and easier to verify. Below, you’ll see what CT is in concrete terms, how it shows up in core disciplines, what the evidence and limits look like, and how to build CT without sacrificing scientific depth.

What Computational Thinking Actually Comprises

CT is not a synonym for “coding.” It is a toolbox of practices that include decomposition (breaking problems into units with clear inputs and outputs), pattern recognition (finding invariants or repeated structure), abstraction (choosing representations that hide noise but preserve signal), algorithm design (specifying exact steps and termination criteria), and evaluation (measuring correctness, complexity, and error). In physics this looks like non-dimensionalizing equations; in chemistry, specifying reaction sequences as state transitions; in engineering, turning requirements into constraint sets.

Mechanistically, CT turns raw data into decisions through a four-stage loop: represent, operate, check, and iterate. Suppose you model bacterial growth. You pick a representation (discrete time steps with a logistic equation), operate (simulate with Δt = 0.1 hours), check (compare residuals against plate counts; target mean absolute percentage error under 10%), and iterate (adjust carrying capacity or time step). The same loop drives circuit simulation: nodal voltages are the states, Kirchhoff's laws define the operations, a simulator such as SPICE checks that each solve converges, and tighter time steps or tolerances reduce error.
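The represent, operate, check, iterate loop for the bacterial-growth case can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: forward-Euler steps of the logistic equation, a known growth rate r, and synthetic "plate counts" generated from the closed-form logistic solution in place of real measurements. The function names, the growth rate, and the candidate carrying capacities are all hypothetical.

```python
import math


def simulate_logistic(n0, r, K, hours, dt=0.1):
    """Represent + operate: forward-Euler steps of dN/dt = r N (1 - N/K).

    Returns the simulated population at every whole hour from 0 to `hours`.
    """
    steps_per_hour = round(1 / dt)
    n = n0
    hourly = [n]
    for _ in range(hours):
        for _ in range(steps_per_hour):
            n += dt * r * n * (1 - n / K)
        hourly.append(n)
    return hourly


def mape(predicted, observed):
    """Check: mean absolute percentage error, in percent."""
    return 100 * sum(abs(p - o) / o for p, o in zip(predicted, observed)) / len(observed)


def fit_carrying_capacity(observed, n0, r, candidates, target_mape=10.0):
    """Iterate: try each candidate K and keep the one with the lowest MAPE."""
    best_K, best_err = None, float("inf")
    for K in candidates:
        err = mape(simulate_logistic(n0, r, K, len(observed) - 1), observed)
        if err < best_err:
            best_K, best_err = K, err
    return best_K, best_err, best_err < target_mape


def synthetic_plate_counts(n0, r, K, hours):
    """Hypothetical stand-in for real plate counts: the exact logistic solution."""
    counts = []
    for t in range(hours + 1):
        growth = math.exp(r * t)
        counts.append(K * n0 * growth / (K + n0 * (growth - 1)))
    return counts


observed = synthetic_plate_counts(n0=1e7, r=0.5, K=1e9, hours=12)
best_K, err, meets_target = fit_carrying_capacity(observed, 1e7, 0.5, [5e8, 1e9, 2e9])
print(f"best K = {best_K:.0e}, MAPE = {err:.1f}%, under 10%: {meets_target}")
```

Because the synthetic counts come from the exact solution, the error left at the best K is discretization error from Δt = 0.1 h; shrinking `dt` is the other knob the loop can turn.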

There are real trade-offs. Abstraction accelerates insight but can hide edge cases (e.g., neglecting hysteresis in material models). Algorithmic choices impose resource constraints: a naive O(n²) clustering on 200,000 points can be 100–1,000 times slower than an O(n log n) alternative; that difference decides whether an analysis runs in minutes or overnight. Discretization choices impose stability and accuracy thresholds: too-large time steps can violate physical constraints (e.g., negative concentrations), too-small steps can blow up compute time without meaningful gain.
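The asymptotic gap is easy to see in a task simpler than clustering: finding the smallest gap between any two of n scalar readings. The sketch below substitutes that task for the clustering example (function names are hypothetical). The naive version compares every pair; the sorted version relies on the fact that, after sorting, the closest pair must be adjacent.

```python
import math


def closest_gap_naive(values):
    """O(n^2): compare every pair of readings."""
    best = math.inf
    for i in range(len(values)):
        for j in range(i + 1, len(values)):
            best = min(best, abs(values[i] - values[j]))
    return best


def closest_gap_sorted(values):
    """O(n log n): sort once; the closest pair is then adjacent."""
    ordered = sorted(values)
    return min(b - a for a, b in zip(ordered, ordered[1:]))


def comparison_counts(n):
    """Rough operation counts: all pairs vs. n log2 n for the sort."""
    return n * (n - 1) // 2, n * math.log2(n)


pairs, sort_ops = comparison_counts(200_000)
print(f"pairs: {pairs:.2e}, n log2 n: {sort_ops:.2e}")
```

For n = 200,000 the pairwise count is about 2 × 10¹⁰ against roughly 3.5 × 10⁶ for the sort. Constant factors, memory access, and vectorization decide the exact wall-clock ratio, but a gap of several orders of magnitude in operation counts is what separates a quick run from an overnight one.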

Jeannette Wing: computational thinking is “solving problems, designing systems, and understanding human behavior by drawing on the concepts fundamental to computer science.”

Direct Payoffs Across STEM Disciplines

Engineering design lives on search and constraints. A truss optimization with 10⁶ candidate members is intractable by intuition alone; CT frames the task as a constrained search with heuristics (greedy elimination, genetic algorithms) and evaluation metrics (stress ratios, weight penalties). Practical result: engineers prune design spaces by orders of magnitude, then validate winners with finite element analysis. Mesh granularity becomes a tunable parameter, not guesswork: start tetrahedral elements at 10 mm, refine where stress gradients exceed 5% per element until displacement changes fall below 1% between iterations.
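The refine-until-converged rule can be sketched without a full finite element package. As a hypothetical one-dimensional stand-in, the code below computes the tip deflection of a cantilever under a uniform load w by the unit-load method, integrating numerically over segments of length h, and halves h until the deflection changes by less than 1% between iterations. The closed form wL⁴/(8EI) is used only to check the result; the function names and load values are illustrative assumptions.

```python
def tip_deflection(w, L, EI, h):
    """Unit-load method: delta = (1/EI) * integral of M(x) * m(x) dx,
    evaluated with the midpoint rule on segments of length about h."""
    n = max(1, round(L / h))
    dx = L / n
    total = 0.0
    for i in range(n):
        x = (i + 0.5) * dx               # segment midpoint, measured from the fixed end
        M = w * (L - x) ** 2 / 2         # bending moment from the uniform load
        m = L - x                        # bending moment from a unit tip load
        total += M * m * dx
    return total / EI


def refine_until_converged(w, L, EI, h0, rel_change=0.01, max_iter=20):
    """Halve the segment length until successive results differ by < rel_change."""
    h = h0
    previous = tip_deflection(w, L, EI, h)
    for _ in range(max_iter):
        h /= 2
        current = tip_deflection(w, L, EI, h)
        if abs(current - previous) / abs(current) < rel_change:
            return current, h
        previous = current
    raise RuntimeError("mesh did not converge within max_iter refinements")


w, L, EI = 1_000.0, 2.0, 2.0e6           # N/m, m, N*m^2 (illustrative values)
deflection, h = refine_until_converged(w, L, EI, h0=1.0)
exact = w * L**4 / (8 * EI)
print(f"converged at h = {h} m: {deflection:.6e} m (closed form {exact:.6e} m)")
```

Starting deliberately coarse (h = L/2) shows the loop doing real work: it takes three halvings before the change between iterations drops below 1%.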

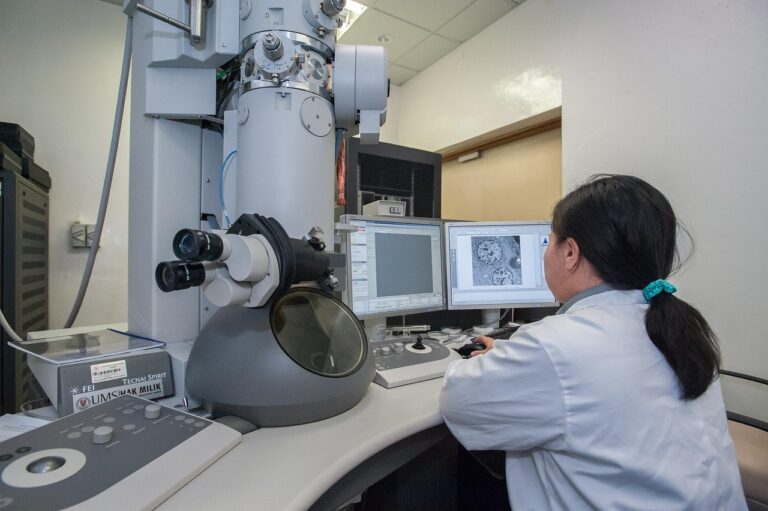

In biology, CT separates signal from noise at scale. A typical RNA-seq pipeline decomposes into alignment, quantification, normalization, differential testing, and multiple-hypothesis correction. Each step has algorithmic trade-offs. Exact alignment can be prohibitive; heuristics like seed-and-extend (e.g., BLAST-like approaches) trade a small, measurable loss in sensitivity for 10–100× speedups, which matters when you have 10⁷ reads per sample. CT also enforces reproducibility: a scripted pipeline with fixed random seeds and a manifest of software versions can be rerun by a collaborator in under an hour, whereas a manual spreadsheet workflow is hard to audit and easy to corrupt.
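The multiple-hypothesis correction stage is a good example of a pipeline step that fits in a short, testable function. A common choice for differential expression is the Benjamini–Hochberg procedure, which controls the false discovery rate; the sketch below assumes that procedure (the text does not name one) and returns adjusted p-values in the input order. Gene IDs and p-values are hypothetical.

```python
def benjamini_hochberg(pvalues):
    """Benjamini-Hochberg adjusted p-values (false discovery rate control).

    adjusted p_(i) = min over j >= i of p_(j) * m / j, capped at 1,
    where p_(1) <= ... <= p_(m) are the sorted p-values.
    """
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so each value is a running minimum.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, pvalues[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


genes = ["geneA", "geneB", "geneC", "geneD"]   # hypothetical gene IDs
raw_p = [0.01, 0.04, 0.03, 0.005]
for gene, q in zip(genes, benjamini_hochberg(raw_p)):
    print(gene, round(q, 3))
```

Genes whose adjusted values fall below a chosen FDR threshold (for example 0.05) would be reported as differentially expressed. Established statistics packages already implement this step; the point of the sketch is that it is deterministic and easy to unit-test.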

Physics and chemistry rely on modeling and numerical stability. CT shows up when choosing among solvers: explicit methods are simple and parallelizable but constrained by stability limits (e.g., the Courant–Friedrichs–Lewy condition), whereas implicit methods permit larger time steps at the cost of solving linear systems each iteration. CT guides the decision by quantifying error tolerance, wall-clock time, and memory budgets. It also enforces unit safety: treating units as types (like a static analysis pass) catches dimensional mistakes before they become invalid results.
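The stability trade-off shows up even in a single first-order reaction, dc/dt = -kc. This is not the CFL condition itself (that applies to advection-type PDEs) but an analogous limit: forward (explicit) Euler multiplies c by (1 - kΔt) each step, so it produces negative concentrations once kΔt > 1 and diverges once kΔt > 2, while backward (implicit) Euler divides by (1 + kΔt) and stays positive for any step size. For one scalar equation the implicit "linear solve" is a single division; for a reaction network it becomes a matrix solve. The rate constant and step sizes below are illustrative.

```python
def explicit_euler_decay(c0, k, dt, steps):
    """Forward Euler: c_{n+1} = c_n * (1 - k*dt)."""
    c = [c0]
    for _ in range(steps):
        c.append(c[-1] * (1 - k * dt))
    return c


def implicit_euler_decay(c0, k, dt, steps):
    """Backward Euler: solve c_{n+1} = c_n - k*dt*c_{n+1}, i.e. c_{n+1} = c_n / (1 + k*dt)."""
    c = [c0]
    for _ in range(steps):
        c.append(c[-1] / (1 + k * dt))
    return c


k = 10.0  # 1/s, illustrative rate constant
for dt in (0.05, 0.15, 0.25):  # k*dt = 0.5, 1.5, 2.5
    explicit = explicit_euler_decay(1.0, k, dt, steps=8)
    implicit = implicit_euler_decay(1.0, k, dt, steps=8)
    print(f"k*dt = {k * dt:.2f}: explicit min = {min(explicit):+.3f}, "
          f"implicit min = {min(implicit):+.3f}")
```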

Data-centric work in STEM benefits from CT’s emphasis on designs that are computable and testable. Consider an A/B test for sensor firmware. CT leads you to predefine metrics (false-positive rate under 2%), randomization schemes (block randomization by device batch), and stopping rules (minimum 1,000 events per arm). You then implement the analysis as a script with a single entry point and a “replay” mode that reruns on historical data. The payoff is not just speed; it reduces researcher degrees of freedom, narrowing the risk of p-hacking and making negative results more informative.
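The randomization scheme, stopping rule, and metric can all live in the analysis script, so they are fixed before any data arrive. This is a minimal sketch assuming devices are identified by string IDs and grouped into batches; the function names, the seed, and the arm labels "A"/"B" are hypothetical.

```python
import random
from collections import defaultdict


def block_randomize(device_ids, batch_of, seed=2024):
    """Assign arms 'A'/'B' within each device batch so every batch is split evenly
    (arm sizes differ by at most one). A fixed seed makes the assignment replayable."""
    rng = random.Random(seed)
    by_batch = defaultdict(list)
    for device in device_ids:
        by_batch[batch_of[device]].append(device)
    assignment = {}
    for batch in sorted(by_batch):
        members = sorted(by_batch[batch])   # canonical order before shuffling
        rng.shuffle(members)
        half = len(members) // 2
        for position, device in enumerate(members):
            assignment[device] = "A" if position < half else "B"
    return assignment


def ready_to_analyze(events_per_arm, minimum_events=1_000):
    """Pre-registered stopping rule: analyze only once every arm has enough events."""
    return all(count >= minimum_events for count in events_per_arm.values())


def false_positive_rate(false_positives, true_negatives):
    """Predefined metric: FP / (FP + TN); the target in the text is under 2%."""
    return false_positives / (false_positives + true_negatives)
```

Because the assignment depends only on the device list and the seed, a "replay" run on historical data can regenerate it exactly and confirm nothing drifted.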

What The Evidence Shows (And What It Doesn’t)

Educational research on CT is growing but not uniform. Small controlled studies in K–12 and early undergraduate settings often report improvements in problem-solving or math achievement after CT-infused instruction, but sample sizes are frequently modest and interventions vary widely. Effects are typically small to moderate, and transfer is strongest when CT tasks closely mirror the target domain (e.g., algorithmic stoichiometry practice helping with chemical reaction balancing). Evidence is mixed when CT is taught abstractly and expected to generalize automatically to physics or biology without explicit mapping.

In the workforce, demand signals are clearer. Roles that combine domain expertise with algorithmic reasoning (bioinformatics analysts, computational materials scientists, controls engineers) consistently outpace average occupational growth. Over a decade, many such roles post double-digit percentage growth and offer salary premiums relative to purely domain or purely software roles, especially in regulated or data-rich industries. The size of the premium varies by region and sector, but employers repeatedly reward the ability to formalize problems, automate analyses, and defend assumptions.

But CT is not a universal solvent. Transfer isn’t automatic: solving maze puzzles may not help with differential equations unless both are framed with shared representations (states, transitions, invariants). Over-automation can mask scientific reasoning: a black-box model that fits spectra may obscure mechanistic understanding unless you validate on counterfactuals or probe feature importance. There are also resource and ethical constraints. Training large models may exceed a lab’s GPU budget or energy limits; handling human data invokes privacy regulations. Goodhart’s law applies: once a metric (e.g., accuracy) becomes the target, CT demands additional guardrails (calibration, fairness, uncertainty quantification) to prevent gaming the metric at the expense of truth.

National Research Council: modeling and the use of computational tools are core scientific practices that help students “develop, evaluate, and revise scientific explanations.”

Building Computational Thinking Without Losing Core Science

Integrate CT where it naturally amplifies domain learning, not as a bolt-on. A workable pattern is to allocate 5–10% of course time to CT-infused labs that mirror existing content. In an introductory mechanics course, students can write a paper-and-pencil algorithm for projectile motion with drag, then translate to code. Start with a minimal stack: spreadsheets for data cleaning and Python notebooks for simulation. As a rule of thumb, move from spreadsheets to scripts when you exceed 50,000 rows, repeat an analysis more than twice, or need versioned provenance. Keep computational environments lightweight so a peer can reproduce results in under 10 minutes.
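The paper-and-pencil algorithm for projectile motion with drag translates almost line for line into code. The sketch below assumes quadratic drag with acceleration -k|v|v (k in 1/m, per unit mass), forward-Euler time steps, and linear interpolation to locate the ground crossing; the function name and parameter values are illustrative. Setting k = 0 gives a built-in check against the drag-free range v₀² sin 2θ / g.

```python
import math


def simulate_projectile(v0, angle_deg, drag_k=0.0, dt=1e-3, g=9.81):
    """Horizontal range of a projectile launched from ground level.

    Drag is modeled as an acceleration -drag_k * |v| * v (drag_k in 1/m).
    Uses forward-Euler steps and interpolates the final step to y = 0.
    """
    theta = math.radians(angle_deg)
    x, y = 0.0, 0.0
    vx, vy = v0 * math.cos(theta), v0 * math.sin(theta)
    while True:
        speed = math.hypot(vx, vy)
        ax = -drag_k * speed * vx
        ay = -g - drag_k * speed * vy
        x_next, y_next = x + vx * dt, y + vy * dt
        vx, vy = vx + ax * dt, vy + ay * dt
        if y_next < 0:
            # Linear interpolation between the last two positions to find y = 0.
            fraction = y / (y - y_next)
            return x + fraction * (x_next - x)
        x, y = x_next, y_next


no_drag = simulate_projectile(20.0, 45.0)
with_drag = simulate_projectile(20.0, 45.0, drag_k=0.01)
print(f"range without drag: {no_drag:.2f} m, with drag: {with_drag:.2f} m")
print(f"drag-free closed form: {20.0**2 / 9.81:.2f} m")
```

Students can then vary `dt` to watch the answer settle and explain, from the force model, why drag shortens the range.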

Assess CT explicitly. Rubrics can award points for decomposition (clearly defined inputs/outputs), correctness (tests that include edge cases and conservation checks), and complexity (explaining why O(n log n) is sufficient and what n means in context). Objective reproducibility checks are powerful: swap teams and require that each group runs the other’s analysis with new data and obtains results within a pre-agreed tolerance (for example, mean absolute error under 2% of baseline). Time-to-rerun is a practical metric; if a lab’s full analysis cannot be rerun start-to-finish in one class period, it’s too fragile.
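The swap-teams reproducibility check reduces to one comparison. This sketch interprets "mean absolute error under 2% of baseline" as the MAE divided by the mean absolute baseline value; that normalization, the function names, and the sample numbers are assumptions a course would pin down in its rubric.

```python
def mean_absolute_error(baseline, rerun):
    """Average absolute difference between two equal-length result lists."""
    if len(baseline) != len(rerun) or not baseline:
        raise ValueError("baseline and rerun must be non-empty and the same length")
    return sum(abs(b - r) for b, r in zip(baseline, rerun)) / len(baseline)


def reproduces(baseline, rerun, rel_tol=0.02):
    """True if the MAE is under rel_tol times the mean absolute baseline value."""
    scale = sum(abs(b) for b in baseline) / len(baseline)
    return mean_absolute_error(baseline, rerun) < rel_tol * scale


team_a = [10.0, 20.0, 30.0]         # hypothetical results from team A's analysis
team_b_rerun = [10.1, 19.9, 30.2]   # team B rerunning team A's pipeline
print(reproduces(team_a, team_b_rerun))
```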

Use tools that enforce good habits. Version control prevents analysis drift and makes errors auditable. Unit tests catch regressions in models (e.g., ensuring energy never increases in a damped oscillator). Aim for 60–80% test coverage in educational settings: high enough to force thinking about edge cases without overwhelming students. Record data provenance with manifests (filenames, checksums, parameter seeds). For projects with human or sensitive data, set guardrails: de-identify, restrict exports, and include an ethics checklist touching consent, bias, and security.
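An energy test for a damped oscillator m x'' + c x' + k x = 0 might look like the sketch below. It assumes an implicit-midpoint integrator, chosen because for this linear system each step changes the energy E = (m v² + k x²)/2 by exactly -c Δt v_mid² (v_mid being the average velocity over the step), which is never positive. A plain explicit Euler step can add spurious energy, which is exactly the kind of regression such a test is meant to catch. Names and parameter values are illustrative; the test function follows pytest's `test_` naming convention.

```python
def simulate_damped_oscillator(x0, v0, m, k, c, dt, steps):
    """Implicit-midpoint integration of m x'' + c x' + k x = 0; returns (x, v) states.

    Solving the midpoint equations for the new velocity gives
    v1 = ((m - a) v0 - dt k x0) / (m + a), with a = dt^2 k / 4 + dt c / 2,
    and then x1 = x0 + dt (v0 + v1) / 2.
    """
    a = dt * dt * k / 4 + dt * c / 2
    x, v = x0, v0
    states = [(x, v)]
    for _ in range(steps):
        v_new = ((m - a) * v - dt * k * x) / (m + a)
        x = x + dt * (v + v_new) / 2
        v = v_new
        states.append((x, v))
    return states


def energy(x, v, m, k):
    """Kinetic plus spring potential energy."""
    return 0.5 * m * v * v + 0.5 * k * x * x


def test_energy_never_increases():
    m, k, c = 1.0, 4.0, 0.3
    states = simulate_damped_oscillator(1.0, 0.0, m, k, c, dt=0.05, steps=2_000)
    energies = [energy(x, v, m, k) for x, v in states]
    tolerance = 1e-12 * energies[0]   # allow floating-point round-off only
    for before, after in zip(energies, energies[1:]):
        assert after <= before + tolerance
    assert energies[-1] < energies[0]  # damping must actually remove energy
```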

Expect tangible, local wins. In a wet lab, automating plate-reader normalization and curve fitting typically saves on the order of 2–5 hours per week and reduces data-entry errors toward zero. In a field study, an outlier-detection algorithm on sensor streams (e.g., median absolute deviation with a 3–5× threshold) can cut false alarms by double-digit percentages without hurting recall. In a design course, writing a script to generate parametric CAD variants can compress a 20-option exploration into a single afternoon, revealing non-intuitive trade-offs (e.g., stiffness plateaus beyond a certain rib spacing).
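A median-absolute-deviation filter is short enough to audit line by line. This sketch flags readings more than `threshold` MADs from the median of a batch, with a default of 3.5 picked from the 3–5× range above. It uses the raw MAD (some implementations first scale it by about 1.4826 so that it estimates the standard deviation for Gaussian data), and the function name and readings are hypothetical. A streaming version would apply the same rule over a sliding window.

```python
import statistics


def mad_outliers(values, threshold=3.5):
    """Flag values farther than `threshold` MADs from the median."""
    center = statistics.median(values)
    deviations = [abs(v - center) for v in values]
    mad = statistics.median(deviations)
    if mad == 0:
        # At least half the readings equal the median: flag anything that differs.
        return [v != center for v in values]
    return [abs(v - center) > threshold * mad for v in values]


readings = [10.0, 10.2, 9.9, 10.1, 10.0, 25.0, 9.8]   # hypothetical sensor values
print(mad_outliers(readings))
```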

Conclusion

For STEM students, computational thinking is the shortest route from “interesting idea” to “working, defensible result.” To put it into practice, adopt four habits: represent problems precisely, choose algorithms with explicit trade-offs, test everything (including assumptions), and script what you’ll need to repeat. If you can rerun your analysis in minutes, explain its limits in one paragraph, and adapt it to a slightly different problem without starting over, you are using CT the way modern science and engineering require.